What is DLSS and how does this technology improve gaming performance?

One of the main technological trends in the gaming industry today is improving graphics without sacrificing performance. Companies approach this in different ways. NVIDIA adds dedicated compute units (tensor cores) to its graphics cards and enhances images using neural networks. AMD takes a more conservative approach, relying on general-purpose algorithms that run on any graphics card.

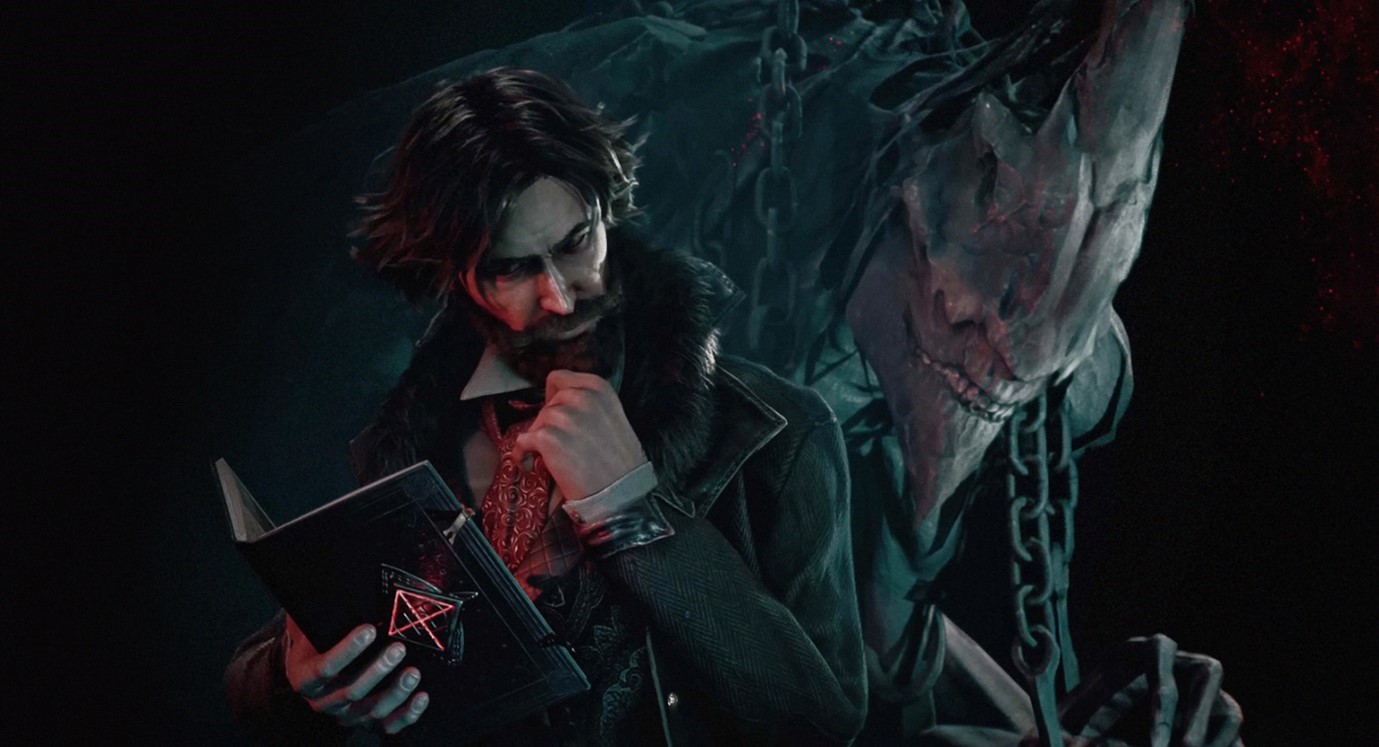

Today, upscaling technologies like DLSS have become the norm in current games. Some developers even openly admit to relying on them during development. For example, the creators of Remnant 2 confessed before its release that they were designing the game with upscaling in mind. Because of this, players began to worry that graphics were becoming increasingly artificial and that developers were struggling to optimize their projects.

DLSS is one such upscaling option. Upscaling is the process of increasing the resolution of a source image. You may have noticed that enabling DLSS in a game automatically disables the regular anti-aliasing setting. There is a reason for this: anti-aliasing is the foundation of all modern upscaling algorithms. To understand how these pieces fit together, let's look at how anti-aliasing (smoothing) methods work.

In some modern games, achieving a high frame rate at high resolutions is nearly impossible without DLSS or FSR.

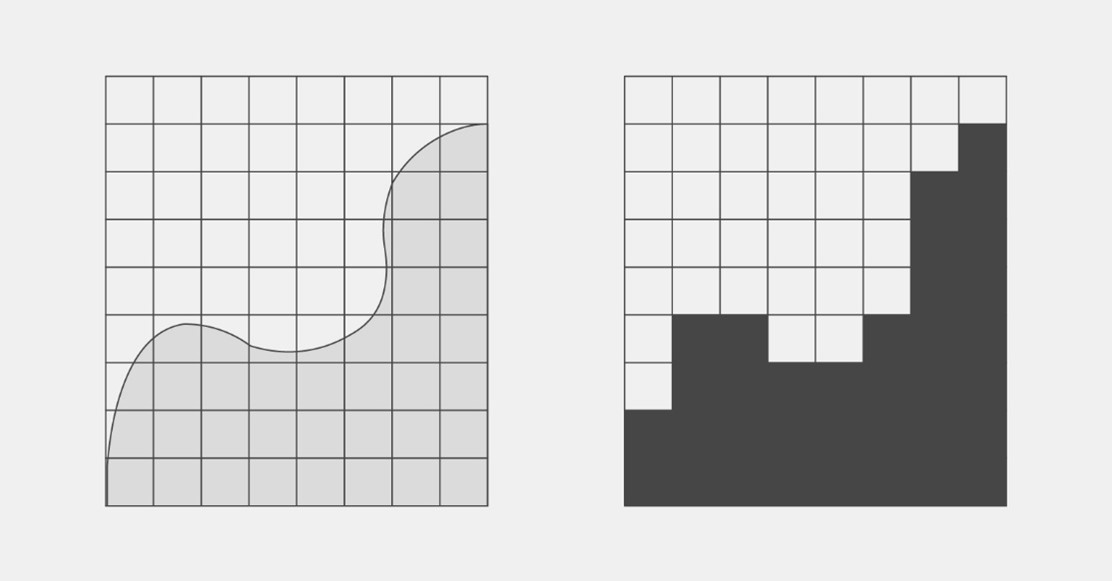

Smoothing, or anti-aliasing, is needed to rid the image of aliasing, commonly known as "jaggies", around object edges. Jaggies inevitably form because monitor pixels are square, and a perfectly smooth shape cannot be built out of squares. The effect can be minimized by filling the space between contrasting pixels with intermediate colors, for example by placing gray pixels between black and white ones.
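The idea of intermediate colors can be shown with a minimal sketch. It assumes a hypothetical one-dimensional scene: a black shape covering everything to the left of `edge_x` on a white background. The function name and the test values are illustrative only. Without smoothing, each pixel is either fully black or fully white; with smoothing, the pixel that the edge passes through gets a gray value proportional to how much of it the shape covers.

```python
def shade_row(edge_x, width, antialias):
    """Shade one row of pixels: black shape (0) for x < edge_x, white (255) elsewhere."""
    row = []
    for i in range(width):
        if antialias:
            # Fraction of the pixel [i, i + 1) covered by the shape.
            coverage = min(1.0, max(0.0, edge_x - i))
        else:
            # One sample at the pixel center: all or nothing.
            coverage = 1.0 if i + 0.5 < edge_x else 0.0
        row.append(int(255 * (1.0 - coverage) + 0.5))
    return row


print(shade_row(2.5, 5, antialias=False))  # [0, 0, 255, 255, 255]
print(shade_row(2.5, 5, antialias=True))   # [0, 0, 128, 255, 255]
```

The gray value 128 in the second row is exactly the "gray pixel between black and white" described above.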

Monitor pixels are discrete: you can't light up only half of a square. This creates aliasing whenever a smooth object is displayed on the screen. One could simply increase the pixel density to the point where the "jaggies" are no longer noticeable. However, even today this is too resource-intensive, expensive, and unrealistic. Hence, developers visually "blur" the jagged areas instead.

The simplest way to eliminate aliasing is to "blur" all contrasting areas of the image with intermediate colors. Such algorithms are known as post-processing anti-aliasing. Some of them, like FXAA, can still be found in games. Their main advantage is low system load: they work on the already rendered frame and need almost no additional processing, so the frame rate hardly drops.
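To make the principle concrete, here is a deliberately simplified sketch of contrast-based post-processing on a grayscale image. It is not the actual FXAA algorithm; the function name `postprocess_smooth` and the threshold value are assumptions chosen for illustration. Each pixel is compared with its four neighbors, and if the local contrast is high, the pixel is replaced by the neighborhood average.

```python
def postprocess_smooth(img, threshold=64):
    """Blur only high-contrast pixels of a grayscale image (list of rows)."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            # Center pixel plus its 4 neighbors, clamped at the image border.
            n = [
                img[y][x],
                img[max(y - 1, 0)][x],
                img[min(y + 1, h - 1)][x],
                img[y][max(x - 1, 0)],
                img[y][min(x + 1, w - 1)],
            ]
            if max(n) - min(n) > threshold:
                out[y][x] = sum(n) // len(n)
    return out


edge = [[0, 0, 255, 255]] * 3
print(postprocess_smooth(edge)[0])  # [0, 51, 204, 255]
```

Note that the filter only sees finished pixel colors. It has no idea which contrast comes from a geometric edge and which comes from texture detail, which is why this family of methods tends to soften the picture.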

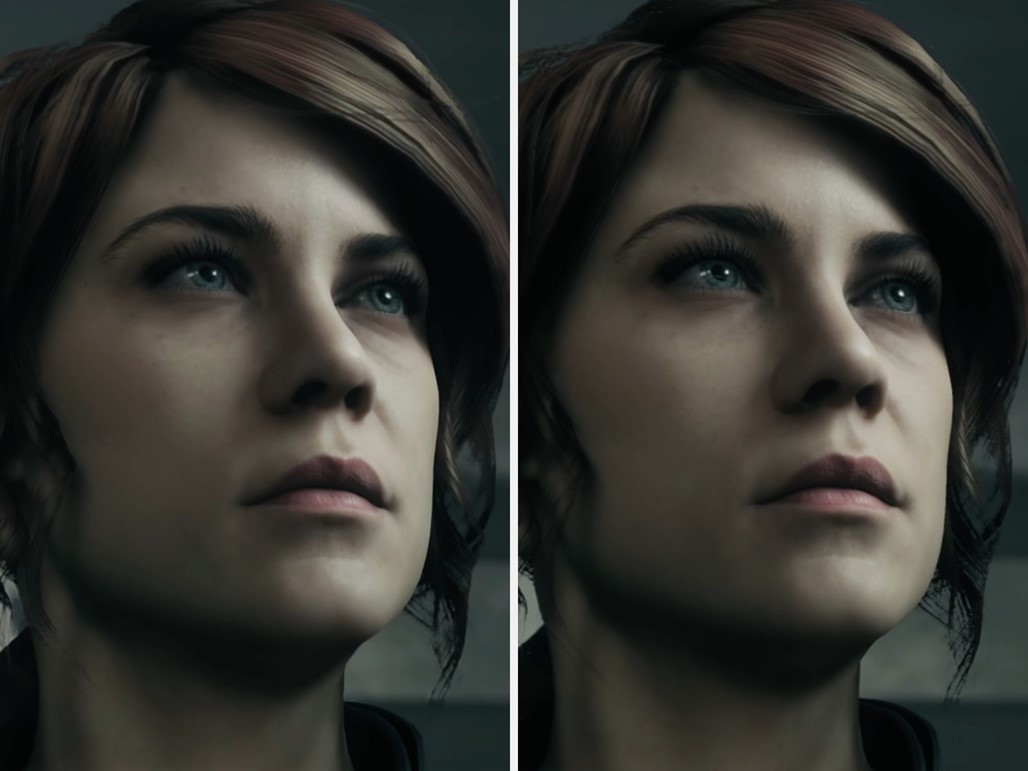

Example of FXAA in action (first image without it, second image with it). The smoothed scene has lost its "jaggies" but looks blurrier.

But such efficiency comes at the cost of quality. Post-processing smoothing inevitably softens the entire image, not just the edges. For a higher-quality result, the algorithm needs information about the scene itself: the positioning of objects, their distances from each other, and so on.

Some types of post-processing smoothing are more sophisticated. MLAA, for instance, smooths object edges depending on the shape they form. As a result, the system leaves a straight contrasting line alone, which means less blurriness on the screen. This doesn't guarantee high image quality, but in some cases it looks better than FXAA.

The most direct way to achieve high-quality smoothing is to render the scene at a higher resolution than the display needs and then scale it down. This is called supersampling. The brute-force version raises the resolution of the entire scene at once, which is how SSAA works. In this case, the algorithm knows what is located at object edges and can smooth them properly. It's a good but very resource-intensive method, as the graphics card has to process the image at a higher resolution.
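A minimal sketch of the SSAA idea follows. The `scene` function is a hypothetical stand-in for a renderer: it returns black for points below a diagonal and white elsewhere. With `factor=1` each pixel takes a single sample at its center; with `factor=2` each pixel is rendered as a 2×2 block of samples that is then averaged down.

```python
def scene(x, y):
    """Hypothetical scene: black (0) where y > x, white (255) elsewhere."""
    return 0 if y > x else 255


def render(width, height, factor=1):
    """Render with factor x factor samples per pixel, then average them."""
    img = []
    for py in range(height):
        row = []
        for px in range(width):
            total = 0
            for sy in range(factor):
                for sx in range(factor):
                    # Sample positions are spread evenly inside the pixel.
                    x = px + (sx + 0.5) / factor
                    y = py + (sy + 0.5) / factor
                    total += scene(x, y)
            row.append(total // (factor * factor))
        img.append(row)
    return img


print(render(3, 3, factor=1))  # [[255, 255, 255], [0, 255, 255], [0, 0, 255]]
print(render(3, 3, factor=2))  # [[191, 255, 255], [0, 191, 255], [0, 0, 191]]
```

The pixels on the diagonal become an intermediate gray, but the price is visible in the loops: `scene` is evaluated four times per pixel everywhere, including areas with no edge at all.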

SSAA is rarely found in games today. It has largely been replaced by NVIDIA's DSR and DLDSR. They operate on the same principle, but DLDSR uses tensor cores to lighten the load on the system, so the frame rate in the game doesn't drop as much.

There are more advanced systems as well. For example, MSAA first determines object boundaries and takes extra samples only there. As a result, the algorithm effectively smooths the edges in the scene while consuming fewer graphics card resources. However, MSAA doesn't touch pixels inside objects, so aliasing within surfaces remains, which makes it imperfect as well.
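The saving can be illustrated with a rough sketch, assuming four fixed sample positions per pixel and a hypothetical triangle that covers all points with `y > x`. Coverage is tested at every sample, but the (pretend-expensive) `shade` function runs at most once per pixel, and the result is blended with the background according to how many samples were covered. Real hardware MSAA is more involved; the names here are illustrative.

```python
SAMPLE_OFFSETS = [(0.25, 0.25), (0.75, 0.25), (0.25, 0.75), (0.75, 0.75)]


def covered(x, y):
    """Hypothetical triangle: covers every point with y > x."""
    return y > x


def msaa_render(width, height, background=255):
    """Return (image, number of shading calls)."""
    shade_calls = 0

    def shade(px, py):
        nonlocal shade_calls
        shade_calls += 1
        return 0  # stand-in for an expensive pixel shader; returns black

    img = []
    n = len(SAMPLE_OFFSETS)
    for py in range(height):
        row = []
        for px in range(width):
            # Cheap coverage test at every sample position.
            hits = sum(covered(px + ox, py + oy) for ox, oy in SAMPLE_OFFSETS)
            if hits == 0:
                row.append(background)
                continue
            color = shade(px, py)  # shaded once per pixel, not once per sample
            row.append((color * hits + background * (n - hits)) // n)
        img.append(row)
    return img, shade_calls


image, calls = msaa_render(3, 3)
print(image)  # [[191, 255, 255], [0, 191, 255], [0, 0, 191]]
print(calls)  # 6
```

Only six shading calls are made for the nine pixels, while the edge pixels still receive an intermediate gray. Because interior pixels are shaded once at a single position, any aliasing inside a surface passes through unchanged.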

DLSS 2.0 is the second iteration of DLSS (Deep Learning Super Sampling).

In hindsight, the first iteration of DLSS was a dead end: it could not produce a detailed image. Everything changed with the introduction of DLSS 2.0, when the algorithm was reworked into the form we know today. Instead of smoothing and upscaling each frame of the game on its own, the system combines several previous frames into one high-quality image.

The neural network is still responsible for deciding how best to combine those frames and for removing the inevitable graphical artifacts. In other words, DLSS 2.0 became a full-fledged, more technologically advanced counterpart to TAAU (temporal anti-aliasing with upsampling). This approach has a significant advantage: the system doesn't have to invent information, because it is actually present across the different low-resolution frames.
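Why several low-resolution frames can contain the full high-resolution picture is easiest to see in a toy example. The sketch below assumes a static one-dimensional scene and a different sub-pixel offset (jitter) for each frame; all names are hypothetical. It leaves out everything that makes the real problem hard, such as motion, stale samples, and the neural network itself.

```python
def render_low_res(truth, scale, jitter):
    """Sample every `scale`-th value of the high-res signal, shifted by `jitter`."""
    return [truth[i + jitter] for i in range(0, len(truth), scale)]


def accumulate(frames, scale):
    """Scatter jittered low-res frames back into one high-res buffer."""
    out = [None] * (len(frames[0][1]) * scale)
    for jitter, frame in frames:
        for k, value in enumerate(frame):
            out[k * scale + jitter] = value
    return out


truth = [0, 0, 64, 128, 192, 255, 255, 255]  # what a full-resolution render would show
scale = 2                                    # each frame has half the resolution
frames = [(j, render_low_res(truth, scale, j)) for j in range(scale)]

print(frames[0][1])                        # [0, 64, 192, 255]
print(frames[1][1])                        # [0, 128, 255, 255]
print(accumulate(frames, scale) == truth)  # True
```

Each frame alone misses half of the detail, yet together they reconstruct the original exactly. In a real game the scene moves between frames, so the samples have to be re-aligned and outdated ones rejected, and that is the part where the choice of how to combine frames matters.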

Furthermore, with DLSS 2.0, NVIDIA began using a universal, deeper neural network that doesn't need to be trained for each game individually. This made the technology much more attractive to developers and also improved its quality. The number of games supporting it has increased rapidly, and the quality of upscaling sometimes rivals that of native-resolution images.